Estimating Garment Patterns from Static Scan Data

Abstract

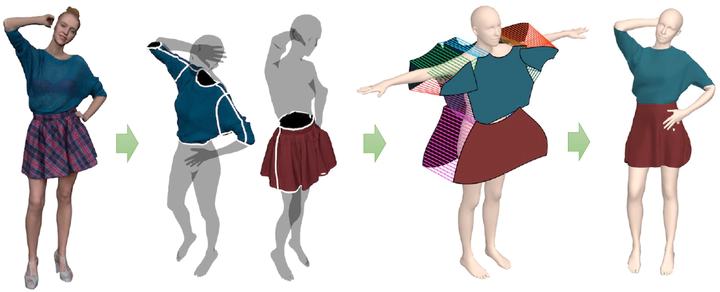

Highly detailed static 3D scans of clothed people are becoming widely available. Because a scan is delivered as a single mesh without semantic separation, animating it requires modeling the shape and deformation behaviour of individual body and garment parts. This paper presents a new method for generating simulation-ready garment models from static 3D scans of clothed humans. A key contribution of our method is a novel approach to garment segmentation that finds optimal boundaries between the skin and the garment. By using an implicit representation of the boundary together with a strategy for optimizing it, our boundary-based segmentation separates garments stably and smoothly. In addition, we present a novel framework that constructs a 2D pattern from the segmented garment and places it around the body for a draping simulation. We validate the effectiveness of our method by generating garment patterns for a number of scans.