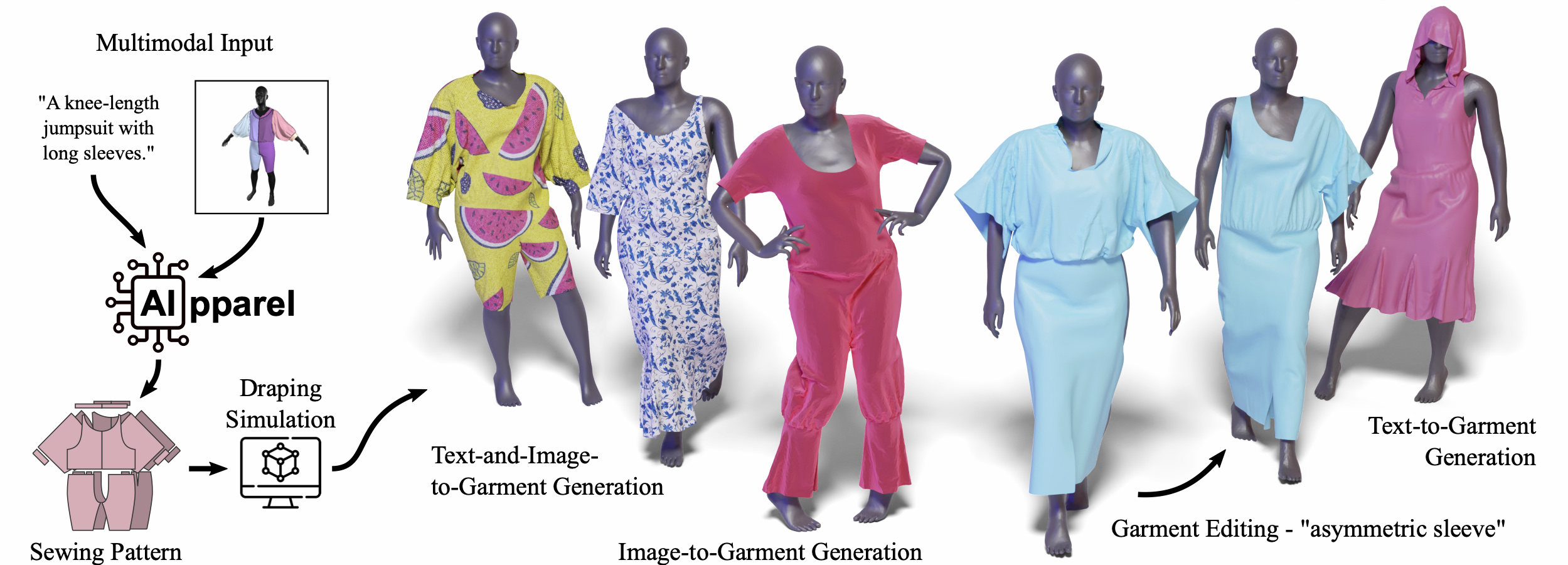

AIpparel is a multimodal foundation model for digital garments, trained by fine-tuning a large multimodal model on a custom sewing-pattern dataset using a novel tokenization scheme for these patterns.

Abstract

Apparel is essential to human life, offering protection, mirroring cultural identities, and showcasing personal style. Yet, the creation of garments remains a time-consuming process, largely due to the manual work involved in designing them. To simplify this process, we introduce AIpparel, a large multimodal model for generating and editing sewing patterns. Our model fine-tunes state-of-the-art large multimodal models (LMMs) on a custom-curated large-scale dataset of over 120,000 unique garments, each with multimodal annotations including text, images, and sewing patterns. Additionally, we propose a novel tokenization scheme that concisely encodes these complex sewing patterns so that LLMs can learn to predict them efficiently. AIpparel achieves state-of-the-art performance in single-modal tasks, including text-to-garment and image-to-garment prediction, and it enables novel multimodal garment generation applications such as interactive garment editing.